If love is just a series of chemical reactions in the brain, then what exactly is stopping us from loving an AI? Presumably, not much. I mean, think about it. Do we really need someone (or something) to love us back in order to feel love towards them? I would argue that expressions of love are, at their core, simply projections. An attempt to put into actions or words what you yourself feel towards another. The reciprocity of this relationship is inessential to the feelings of love being sincere.

It is this unfortunate aspect of love that makes it ever so painful to seek out companionship in this world. What if the person you fall for never feels the same? What if your partner falls out of love with you? What if you love someone who you shouldn’t – someone who hurts you, over and over again? What if the sheer idea of this type of pain is so frightening that you find yourself unable or unwilling to seek out companionship to begin with?

Or, perhaps more relevant to this particular discussion, what if those fears push you away from human intimacy and towards forming bonds with artificial intelligence? If the issue with this unreciprocated love is that it is miserable and painful, it would seem that AI relationships may very well be a solid alternative. If an AI plays to your sensibilities and your weaknesses and cares for you like a human partner would, without you ever having to fear that it will leave the relationship or fall in love with someone else, then clearly AI partners are far superior in the modern age. Especially at a time when many people are choosing not to have or parent children.

This line of thinking may very well be worth looking into, philosophically. Could a human truly benefit more from an illusory, self-aggrandising relationship with a non-existent being than from listening to those around them? I’m sure there are a number of religious scholars who could give us an interesting answer to that question. But in terms of reality? Like, the actual state of the world today, and the way in which these people are “forming relationships with AI” – not in some deep, philosophical interaction with a truly artificial life form, but instead through the same old classical projection of love and adoration combined with the modern invention of a glorified auto-correct that is giving them the illusion that that love is in any way recognised, much less reciprocated… it all feels a little disheartening to say the least.

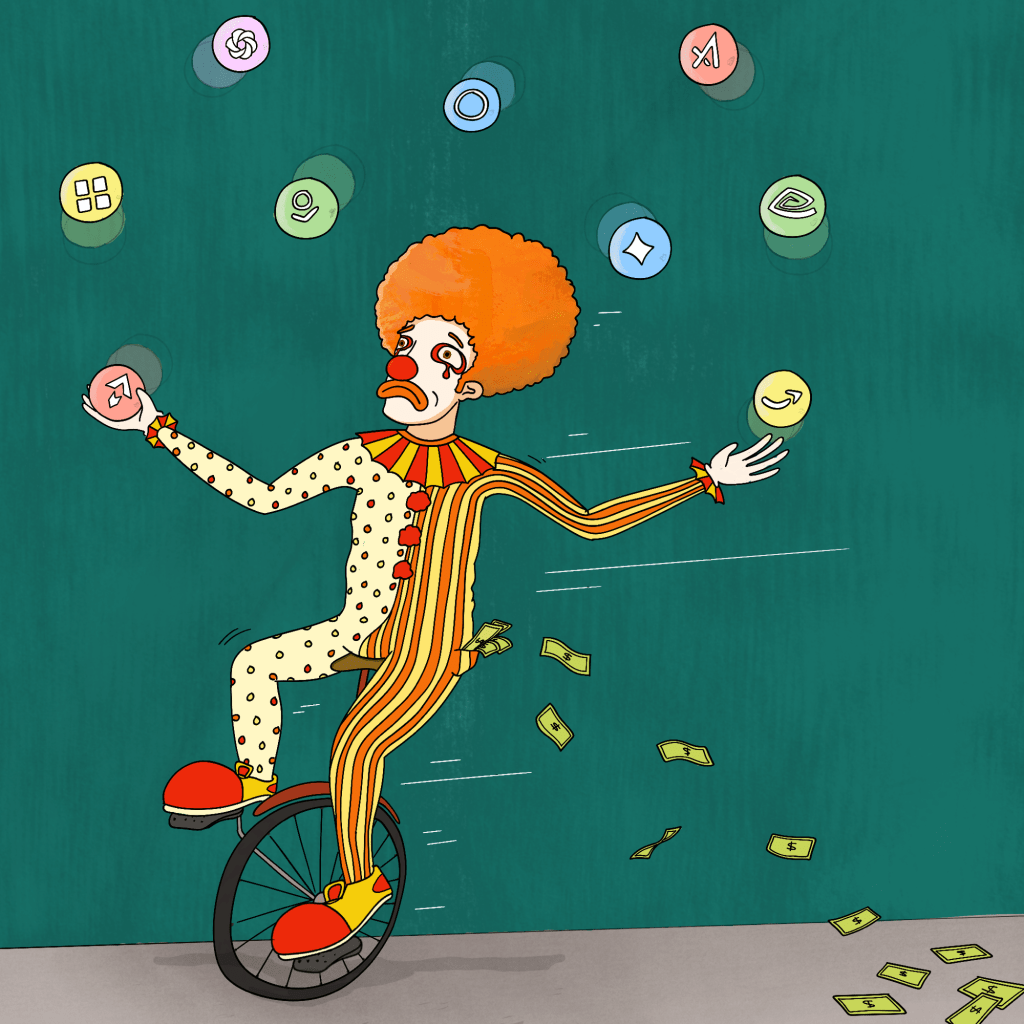

Mainly because of a personal fear I have, not that I will fall in love with an AI and rue the day, nor that someone will truly end up happy, typing away at a desk with an LLM. No, my fear is that, in unprecedented ways, love will be treated as a product. A marketable service to still the chemicals in our bodies that make us feel lonely and wish for companionship. A world where loneliness is treated as an epidemic, and artificial intelligence is offered up as the cure. A world where every beat of our fluttering hearts is another coin in a billionaire’s pocket.

Fuck that.
